bit (binary digit)

What is a bit (binary digit)?

A bit (binary digit) is the smallest unit of data that a computer can process and store. A bit is always in one of two physical states, similar to a light switch that is either on or off. The state is represented by a single binary value, usually 0 or 1, although it can also be expressed as yes/no, on/off or true/false. In dynamic RAM (DRAM), for example, each bit is stored in a capacitor that either holds an electrical charge or does not. The charge determines the bit's state and, in turn, its value.

Although a computer might be able to test and manipulate data at the bit level, most systems process and store data in bytes. A byte is a sequence of eight bits treated as a single unit. Memory and storage capacities are typically expressed in bytes. For example, a storage device might hold 1 terabyte (TB) of data, which equals 1,000,000 megabytes (MB). To put this into perspective, 1 MB equals 1 million bytes, or 8 million bits, so a 1 TB drive can store 8 trillion bits of data.
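The conversions above can be checked with a few lines of Python. This is a minimal sketch that assumes decimal (SI) prefixes, as the text does (1 MB = 10^6 bytes, 1 TB = 10^12 bytes); the constant and function names are illustrative, not part of any standard library.

```python
# Assumption: decimal (SI) prefixes, matching the text.
BITS_PER_BYTE = 8
MEGABYTE = 10**6   # bytes
TERABYTE = 10**12  # bytes

def bytes_to_bits(num_bytes: int) -> int:
    """Convert a byte count to a bit count at 8 bits per byte."""
    return num_bytes * BITS_PER_BYTE

megabytes_per_terabyte = TERABYTE // MEGABYTE
bits_in_1_mb = bytes_to_bits(MEGABYTE)
bits_in_1_tb = bytes_to_bits(TERABYTE)

print(f"1 TB = {megabytes_per_terabyte:,} MB")  # 1 TB = 1,000,000 MB
print(f"1 MB = {bits_in_1_mb:,} bits")          # 1 MB = 8,000,000 bits
print(f"1 TB = {bits_in_1_tb:,} bits")          # 1 TB = 8,000,000,000,000 bits
```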

How a bit works

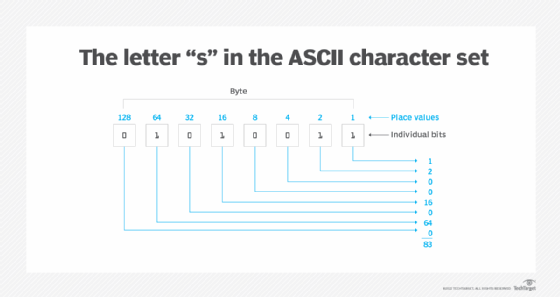

Each bit in a byte is assigned a specific value, referred to as its place value. The place values of the individual bits determine the value of the byte as a whole. When the byte represents text, that value identifies the character associated with the byte.

Place values are assigned from right to left, starting with 1 and doubling for each successive bit, as shown in this table.

| Bit position (right to left) | Place value |
| --- | --- |
| Bit 1 | 1 |
| Bit 2 | 2 |
| Bit 3 | 4 |
| Bit 4 | 8 |
| Bit 5 | 16 |
| Bit 6 | 32 |
| Bit 7 | 64 |
| Bit 8 | 128 |
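Because each place value doubles the previous one, the place value of bit n (counting from 1 at the right) is 2 raised to the power n - 1. The following Python sketch generates the table's values from that rule; the `place_value` function is a hypothetical helper written for illustration.

```python
def place_value(position: int) -> int:
    """Return the place value of a bit, where position 1 is the rightmost bit of a byte."""
    if not 1 <= position <= 8:
        raise ValueError("position must be between 1 and 8 for a single byte")
    # Each position doubles the one before it, so the value is 2 ** (position - 1).
    return 2 ** (position - 1)

place_values = [place_value(n) for n in range(1, 9)]
print(place_values)  # [1, 2, 4, 8, 16, 32, 64, 128]
```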

The place values are combined with the bit values to determine the byte's overall value: the place values of all the 1 bits are added together. When the byte represents text, this total corresponds to a character in the applicable character set. Because each of the 8 bits can be either 0 or 1, a byte has 2^8 = 256 unique bit patterns, ranging from 00000000 (0) to 11111111 (255). A single byte can therefore represent up to 256 different characters.
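The summing rule can be sketched in Python as follows. The hypothetical `byte_value` function assumes the byte is given as a string of eight '0'/'1' characters, reads it right to left, and adds the place value of every 1 bit. The last lines enumerate every 8-bit pattern to confirm there are 256 distinct values.

```python
def byte_value(bits: str) -> int:
    """Add up the place values of the 1 bits in an 8-character string such as '01010011'."""
    if len(bits) != 8 or any(b not in "01" for b in bits):
        raise ValueError("expected exactly eight '0'/'1' characters")
    total = 0
    # Walk the string from right to left so position 1 is the rightmost bit.
    for position, bit in enumerate(reversed(bits), start=1):
        if bit == "1":
            total += 2 ** (position - 1)
    return total

print(byte_value("00000000"))  # 0
print(byte_value("11111111"))  # 255

# format(n, "08b") produces the zero-padded 8-bit pattern for n.
all_values = {byte_value(format(n, "08b")) for n in range(256)}
print(len(all_values))  # 256
```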

For example, in the American Standard Code for Information Interchange (ASCII) character set, the uppercase "S" is assigned the decimal value 83, which is equivalent to the binary value 01010011. This figure shows the "S" byte and the corresponding place values.

The "S" byte includes four 1 bits and four 0 bits. The place values of the 1 bits (64 + 16 + 2 + 1) add up to 83, the decimal value assigned to the ASCII uppercase "S" character. The place values of the 0 bits are not included in the total.
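The "S" example can be reproduced in Python. This sketch relies on the built-in `ord`, `chr` and `format` functions; note that `ord` returns a Unicode code point, which matches the ASCII value for characters in the ASCII range such as "S".

```python
letter = "S"
code = ord(letter)          # Unicode code point, equal to the ASCII value here: 83
bits = format(code, "08b")  # zero-padded 8-bit pattern

# Collect the place values of the 1 bits, reading right to left (place value 2 ** i).
contributing = [2 ** i for i, bit in enumerate(reversed(bits)) if bit == "1"]

print(bits)                    # 01010011
print(contributing)            # [1, 2, 16, 64]
print(sum(contributing))       # 83
print(chr(sum(contributing)))  # S
```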

Because a single byte supports only 256 unique values, some character encodings use multiple bytes per character. For example, the Unicode Transformation Format (UTF) encodings use between 1 and 4 bytes per character: UTF-8 uses 1 to 4 bytes depending on the character, UTF-16 uses 2 or 4, and UTF-32 always uses 4. Despite these differences, all of these encodings are built on 8-bit bytes, with each bit in either a 1 or 0 state.
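The varying byte counts can be observed with Python's built-in `str.encode`. In this sketch the sample characters are arbitrary choices for illustration, and the little-endian codec names (`utf-16-le`, `utf-32-le`) are used so Python does not prepend a byte order mark, which would inflate the counts.

```python
def encoded_lengths(ch: str) -> tuple:
    """Return the number of bytes ch occupies in UTF-8, UTF-16 and UTF-32."""
    # The "-le" codecs omit the byte order mark that plain "utf-16"/"utf-32" would add.
    return (
        len(ch.encode("utf-8")),
        len(ch.encode("utf-16-le")),
        len(ch.encode("utf-32-le")),
    )

# "A", e-acute, the euro sign and a grinning-face emoji, written as escapes.
samples = ["A", "\u00e9", "\u20ac", "\U0001F600"]
for ch in samples:
    utf8, utf16, utf32 = encoded_lengths(ch)
    print(f"U+{ord(ch):04X}  UTF-8: {utf8}  UTF-16: {utf16}  UTF-32: {utf32}")
```

For these samples, UTF-8 needs 1, 2, 3 and 4 bytes respectively, UTF-16 needs 2 bytes except for the emoji (4 bytes, a surrogate pair), and UTF-32 always needs 4.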

The term octet is sometimes used instead of byte, particularly in networking standards, because it always means exactly 8 bits. The term nibble is occasionally used for a 4-bit unit, or half a byte, although it is less common than it once was. In addition, the term word is often used to describe two or more consecutive bytes handled as a unit. A word is usually 16, 32 or 64 bits long.
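A byte splits naturally into two nibbles, and word sizes convert to bytes at 8 bits per byte. The following Python sketch uses the ASCII "S" byte as input and isolates each nibble with a bit shift and a mask; the variable names are illustrative only.

```python
byte = 0b01010011  # decimal 83, the ASCII "S" byte

# A nibble is 4 bits. Shifting right by 4 keeps the high (left) nibble;
# masking with 0b1111 keeps the low (right) nibble.
high_nibble = (byte >> 4) & 0b1111
low_nibble = byte & 0b1111
print(f"{high_nibble:04b} {low_nibble:04b}")  # 0101 0011
print(high_nibble, low_nibble)                # 5 3

# Common word sizes, converted from bits to bytes at 8 bits per byte.
word_bytes = {bits: bits // 8 for bits in (16, 32, 64)}
print(word_bytes)  # {16: 2, 32: 4, 64: 8}
```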

In telecommunications, the bit rate is the number of bits transmitted in a given time period, usually expressed in bits per second (bps) or a multiple such as kilobits per second (kbps).
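Because bit rates are measured in bits while file sizes are usually given in bytes, estimating a transfer time means multiplying the size by 8 first. This Python sketch uses a hypothetical `transfer_time_seconds` helper, assumes decimal prefixes (1 kbps = 1,000 bps) and ignores protocol overhead, so real transfers would take longer.

```python
def transfer_time_seconds(size_bytes: int, bit_rate_bps: float) -> float:
    """Estimate seconds to send size_bytes at bit_rate_bps, ignoring protocol overhead."""
    if bit_rate_bps <= 0:
        raise ValueError("bit rate must be positive")
    # Convert bytes to bits, then divide by bits per second.
    return size_bytes * 8 / bit_rate_bps

print(transfer_time_seconds(1_000, 64_000))         # 1,000 bytes at 64 kbps: 0.125
print(transfer_time_seconds(1_000_000, 8_000_000))  # 1 MB at 8,000 kbps: 1.0
```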
