Network performance monitoring (NPM)

What is network performance monitoring (NPM)?

Network performance monitoring (NPM) is the process of measuring and monitoring the quality of service of a network. NPM helps network administrators gather network data, measure performance variables and identify potential issues or risks.

NPM tools help network administrators manage connected devices and gather metrics from servers and other devices on the network. The tools then analyze the collected data to find bottlenecks or congestion points so that administrators can fix them and improve throughput.

Some NPM tools have automated capabilities, which simplify the process. Automated NPM tools can create reports and alert users when issues occur. Ideally, this feature should enable network admins to address issues before they become bigger problems.
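
As a rough illustration of automated alerting, the sketch below compares one sample of metrics against fixed limits and reports any that are exceeded. The metric names, the limits and the `check_thresholds` helper are hypothetical and chosen only for this example; commercial NPM tools typically use richer rules, such as baselines and time windows.

```python
from dataclasses import dataclass


@dataclass
class Threshold:
    metric: str   # hypothetical metric name, e.g. "latency_ms"
    limit: float  # alert when the sampled value is above this limit


def check_thresholds(sample: dict[str, float], thresholds: list[Threshold]) -> list[str]:
    """Return one alert message per metric that exceeds its limit."""
    alerts = []
    for t in thresholds:
        value = sample.get(t.metric)
        # Metrics missing from the sample are skipped rather than treated as failures.
        if value is not None and value > t.limit:
            alerts.append(f"{t.metric} = {value} exceeds limit {t.limit}")
    return alerts


# Illustrative limits only; appropriate values depend on the network.
THRESHOLDS = [
    Threshold("latency_ms", 100.0),
    Threshold("packet_loss_pct", 1.0),
    Threshold("utilization_pct", 80.0),
]

sample = {"latency_ms": 142.0, "packet_loss_pct": 0.2, "utilization_pct": 91.5}
alerts = check_thresholds(sample, THRESHOLDS)
for message in alerts:
    print(message)
```

With this sample, latency and utilization are above their limits and packet loss is not, so two alerts are produced.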

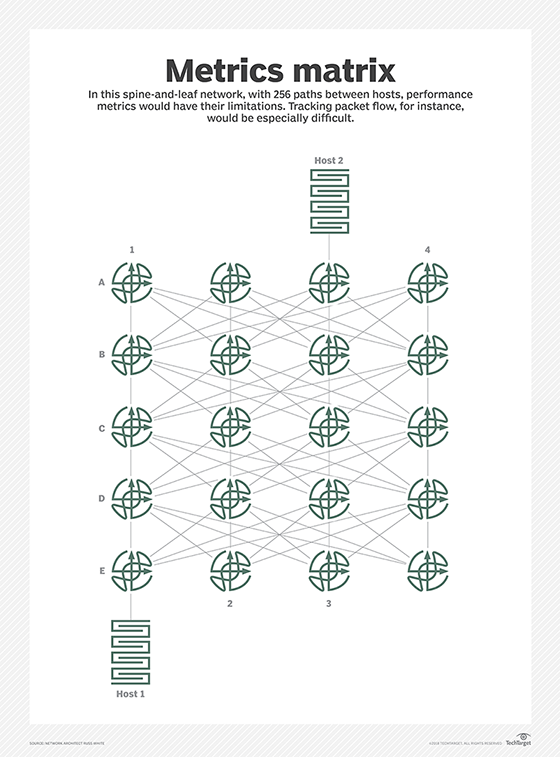

Organizations with strict reliability requirements have driven the need for NPM tools. As network architecture grows in complexity and application delivery becomes more time-sensitive, the need for NPM has increased. Today's network monitoring tools must have a mix of scalability, capability and usability.

Importance of network performance monitoring

NPM enables network administrators to optimize network performance, especially in organizations with strict reliability requirements and complex network architectures. Network administrators can configure tools to monitor the performance of networking components, like servers, routers, switches and virtual machines (VMs).

Bottlenecks negatively affect the user experience (UX). NPM accounts for UX by monitoring the network for bottlenecks or congestion so that admins can resolve them and improve throughput.

How network performance monitoring works

NPM collects data from multiple sources. It monitors flow data, Simple Network Management Protocol (SNMP) and packet capture:

- Flow data. Network devices generate flow data. This data shows which devices are communicating, how long and how often they communicate, and how much data transfers through the network.

- SNMP. SNMP is a widely used protocol for monitoring and managing network devices. Many network and endpoint devices, such as routers and printers, support SNMP. NPM tools can use SNMP to continuously monitor network changes or device status in real time.

- Packet capture. Packet capture is a technique that intercepts packets crossing a specific point in a network. The capture tool copies and stores the packets so network admins can analyze them. Network admins use packet capture to monitor network health and application performance and to support security and incident response. Packet capture also helps troubleshoot service issues and aids with network capacity planning.
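
To make the flow data description more concrete, here is a minimal sketch that groups flow records by source and destination and ranks the pairs by bytes transferred. The `FlowRecord` fields and the `summarize_flows` helper are assumptions made for illustration; they do not follow any particular flow export format.

```python
from collections import defaultdict
from typing import NamedTuple


class FlowRecord(NamedTuple):
    # Hypothetical, simplified record; real flow exports carry many more fields.
    src: str
    dst: str
    bytes_sent: int
    duration_s: float


def summarize_flows(records):
    """Aggregate flows per (src, dst) pair, sorted by total bytes, largest first."""
    totals = defaultdict(lambda: {"bytes": 0, "flows": 0, "seconds": 0.0})
    for r in records:
        entry = totals[(r.src, r.dst)]
        entry["bytes"] += r.bytes_sent     # how much data was transferred
        entry["flows"] += 1                # how often the pair communicated
        entry["seconds"] += r.duration_s   # how long the pair communicated
    return sorted(totals.items(), key=lambda kv: kv[1]["bytes"], reverse=True)


records = [
    FlowRecord("10.0.0.5", "10.0.1.20", 1_200_000, 4.0),
    FlowRecord("10.0.0.7", "10.0.1.20", 300_000, 1.5),
    FlowRecord("10.0.0.5", "10.0.1.20", 800_000, 2.5),
]

top = summarize_flows(records)
for (src, dst), stats in top:
    print(f"{src} -> {dst}: {stats['bytes']} bytes in {stats['flows']} flows")
```

The first entry in `top` is the heaviest conversation, which is usually the starting point when looking for a congestion source.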

NPM tools also typically provide visualization and reports in a customizable dashboard. Network admins should be able to collect and view flow data, SNMP data and captured packets within the tools. If the tools detect any issues, admins should be able to isolate and analyze the issue quickly.

Network admins can use NPM on premises, in the cloud or in hybrid environments.

Network performance monitoring metrics

NPM tools keep track of errors, bandwidth, throughput and latency:

- Errors. The error rate is the percentage of traffic affected by network issues, such as packet loss. Packet loss refers to the number of packets that were sent but didn't reach their destination.

- Bandwidth. Bandwidth is a measurement of the maximum amount of data that can transfer through a network over a specified time period, measured in bits per second. Bandwidth is a measure of capacity, as opposed to a measure of speed.

- Throughput. Throughput is the measure of how many units of information a system actually processes in a given amount of time. Unlike bandwidth, which describes capacity, throughput is a measure of speed because it relates to aspects such as response time.

- Latency. Latency is a measure of how much time it takes for a data packet to travel from one designated point to another. Latency is also a synonym for delay. This measurement, ideally, should be as close to zero as possible.
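
The sketch below shows one way these four metrics could be derived from two readings of an interface's counters plus a few round-trip time measurements. The `InterfaceSample` fields, the sample values and the 10 Mbps link are assumptions for illustration. The wrap handling assumes 32-bit counters, as used by SNMP `Counter32` objects such as `ifInOctets`.

```python
from dataclasses import dataclass
from statistics import mean

COUNTER32_MODULUS = 2**32  # 32-bit counters wrap back to zero at this value


@dataclass
class InterfaceSample:
    # Hypothetical snapshot of one interface's cumulative counters.
    timestamp_s: float
    in_octets: int   # bytes received
    in_packets: int  # packets received, including errored ones (assumption)
    in_errors: int   # packets received with errors


def counter_delta(old: int, new: int, modulus: int = COUNTER32_MODULUS) -> int:
    """Difference between two counter readings, tolerating a single wrap."""
    return (new - old) % modulus


def throughput_bps(prev: InterfaceSample, curr: InterfaceSample) -> float:
    """Bits per second actually received between the two samples."""
    elapsed = curr.timestamp_s - prev.timestamp_s
    if elapsed <= 0:
        raise ValueError("samples must be in increasing time order")
    return counter_delta(prev.in_octets, curr.in_octets) * 8 / elapsed


def utilization_pct(throughput: float, bandwidth_bps: float) -> float:
    """Throughput as a percentage of the link's capacity (bandwidth)."""
    return 100.0 * throughput / bandwidth_bps


def error_pct(prev: InterfaceSample, curr: InterfaceSample) -> float:
    """Percentage of packets in the interval that arrived with errors."""
    packets = counter_delta(prev.in_packets, curr.in_packets)
    errors = counter_delta(prev.in_errors, curr.in_errors)
    return 100.0 * errors / packets if packets else 0.0


# Two samples taken 60 seconds apart; the octet counter wraps in between.
prev = InterfaceSample(0.0, 4_294_000_000, 10_000, 5)
curr = InterfaceSample(60.0, 6_532_704, 60_000, 55)

rate = throughput_bps(prev, curr)
usage = utilization_pct(rate, bandwidth_bps=10_000_000)  # assumed 10 Mbps link
errors = error_pct(prev, curr)
avg_latency_ms = mean([12.0, 15.0, 30.0])  # assumed round-trip times in ms

print(f"throughput: {rate:.0f} bps, utilization: {usage:.1f}%")
print(f"errors: {errors:.2f}%, average latency: {avg_latency_ms:.1f} ms")
```

Keeping bandwidth as a separate input makes the distinction in the list visible: bandwidth is the fixed capacity of the link, while throughput is what the counters show the link actually carried.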

Network performance monitoring benefits and challenges

Benefits of NPM include the following:

- Detects and removes any unusual behavior in a system before it becomes a bigger problem, thus minimizing risk.

- Detects errors and latency in network traffic, which leads to a better end-user experience.

- Optimizes performance, identifies problem areas and collects performance data.

- Identifies malicious activity, as well as tracks and flags unusual behavior and network security threats.

- Offers more flexibility and control with private cloud models.

- Makes public clouds more cost-effective.

- Increases efficiency and scalability in virtualized and automated networks.

Challenges of NPM include the following:

- Some tools can't access virtualized layers, which adds complexity.

- It isn't always simple to track automated changes.

- VMs sometimes can't communicate properly to relay necessary information, which means network teams must monitor the individual VMs and the hypervisor.

- Virtualized and automated networks can mask performance issues.

Network performance monitoring tools

Some NPM vendors target smaller businesses with traditional NPM tools. However, most vendors address the problems larger businesses face due to an increased demand for reliable network services.

Examples of NPM vendor tools include the following:

- Cisco DNA Assurance. Cisco DNA Assurance is part of Cisco's intent-based networking architecture called Digital Network Architecture. Cisco DNA Assurance monitors networks and devices, as well as clients, applications and services. Organizations need an end-to-end Cisco architecture to get the most out of the tool, but it can still collect telemetry information from non-Cisco endpoints, devices and applications.

- DX NetOps. Broadcom's DX NetOps is an NPM tool that monitors, alerts and provides analytics. DX NetOps can capture critical performance metrics in network infrastructures with SNMP, NetFlow, representational state transfer (REST), streaming telemetry, and other network and application measures. The tool can analyze collected information and display the health of network infrastructures, software-defined data centers and cloud services. The product suits multivendor networks equipped with legacy and modern network components.

- Mist AI and Cloud. Juniper's Mist AI and Cloud is a cloud service that enables customers to monitor Mist access points, regardless of where they are deployed. Mist AI and Cloud uses artificial intelligence (AI) to support network assurance, identify anomalies, automate event correlation, enable root cause analysis and outline steps to remediate issues.